Complete Technical SEO Audit [+ Free Audit Checklist]

This is Part 2 of our step-by-step guide to On-Page SEO. Part 1 covers the content elements, whereas this guide covers technical SEO.

Technical SEO is part of your overall on-page SEO strategy, including elements such as site speed, site architecture, 404 errors, and more.

We consider Local SEO to be a variant of all three main types of SEO.

Let’s get started!

Before we begin, if you want to go deeper, download this swipe file, which includes your 17-Step Site Audit Checklist and other free goodies.

We’ve included bonus on-page SEO techniques to help demystify information architecture, also known as your site’s navigation, and show you how to use the included information architecture-keyword planning spreadsheet.


Note: You may need assistance from your developer for some of this work.

Using Tools To Audit

This guide will cover the technical components of your on-page SEO strategy, plus a free checklist to guide your auditing.

SEO Tools

Google Search Console (Free)

SEMRush (You can use a limited free account or upgrade to paid)

This section walks through a detailed workflow for using SEMRush to run your own audit. You can run a site audit with a free account, but if you plan to do your own audits long-term, you’ll want to upgrade to access more tools.

To set up a Site Audit, you must first create a Project for the domain. Once you have your new project, click the “Set up” button in the Site Audit block of your Project interface.

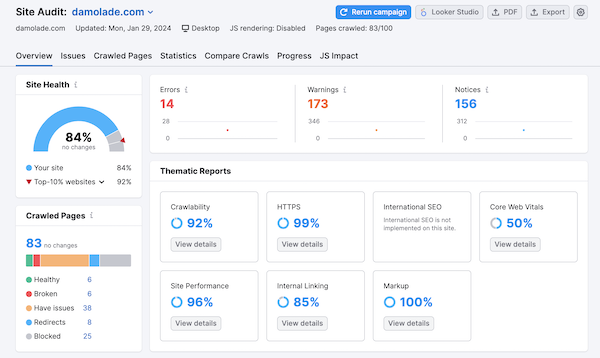

The Site Audit tool in SEMRush can scan your website and provide data about all the pages it can crawl. The report it generates will help you identify various technical SEO issues.

Create your project in SEMRush and walk through the configuration steps, then hit “Start Site Audit.”

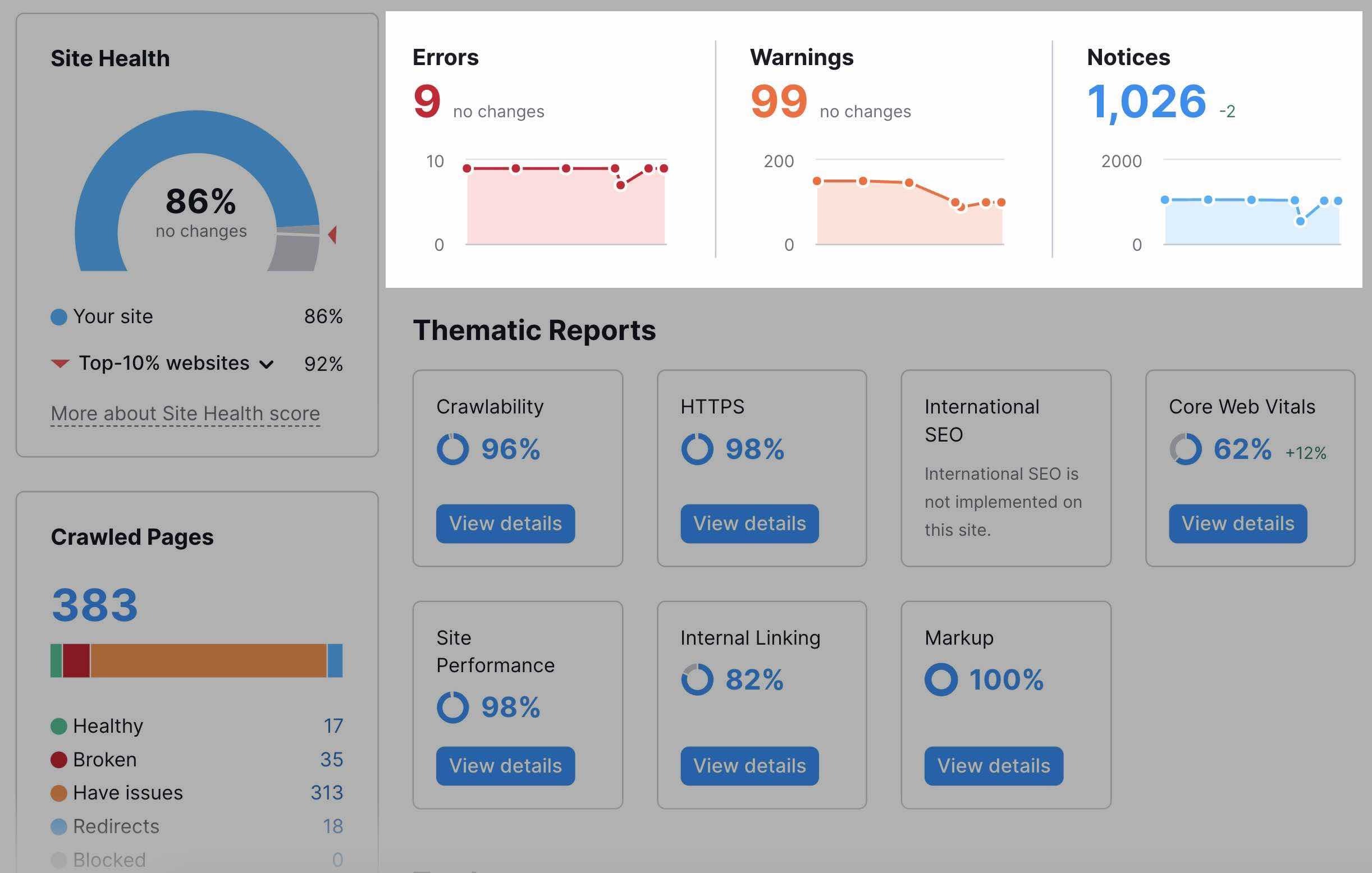

After the tool crawls your site, it generates an overview of your site’s health with the Site Health metric.

Along with your Site Health score, the report shows additional panels with benchmarking metrics, which help you decide what to work on first.

Next, we’ll determine what to prioritize from your technical SEO audit results.

Crawlability

Structure Your Content and Create a Site Map

If you don’t have an XML sitemap yet, creating one is a great first step in getting your site into optimal shape. Your website’s navigation is the structure visitors see and click through; your XML sitemap is a machine-readable list of the URLs on your site. Search engine crawlers read your sitemap to discover the pages you want crawled and indexed.

Your visitors want to find what they’re looking for fast. Otherwise, they’ll bounce on over to your competitor’s website. Create a hierarchy that flows, makes logical sense, and gets visitors to where they want to go—ideally, to where they’ll complete a conversion.

Here’s an example of a well-organized website hierarchy from Google:

Your menu should be simple to navigate and present options a potential buyer would expect.

How to Create and Submit a Sitemap

An XML sitemap is a file, in XML format, that lists the URLs on your site. Most content management platforms and many plugins will generate one for you. Yoast, a popular SEO plugin for WordPress sites, provides one automatically, or you can use the Google XML Sitemaps plugin to generate one.

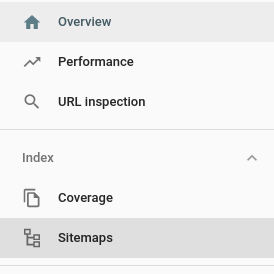

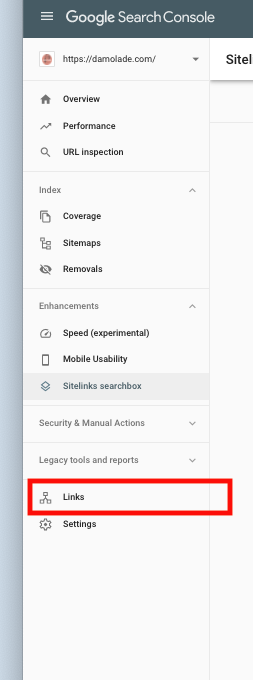
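If you’re curious what’s inside the file your plugin generates, here’s a minimal sketch in Python (standard library only) that builds one following the sitemaps.org format: a urlset of url entries, each with a loc and an optional lastmod date. The function name, the example.com URLs, and the dates are hypothetical placeholders; on a live site, your CMS or plugin will normally keep this file up to date for you.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML (as a string) for a list of (url, lastmod) pairs.

    lastmod should be a W3C date such as "2024-01-15", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters like & are escaped
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages; replace with your own URLs and dates.
    pages = [
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/services/", None),
    ]
    print(build_sitemap(pages))
```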

Once your sitemap has been generated, submit it to search engines. To do this in Google, follow these steps:

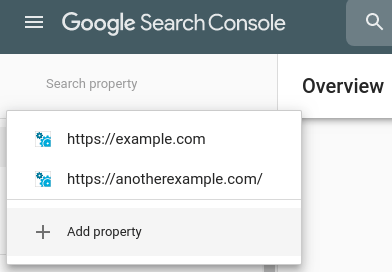

Sign in to Google Search Console (GSC), then:

1. In the sidebar, select your website.

2. Click ‘Sitemaps.’ The ‘Sitemaps’ menu is under the ‘Indexing’ section. If you don’t see ‘Sitemaps’, click ‘Indexing’ to expand the section.

3. Remove any outdated or invalid sitemaps, such as an old sitemap.xml left over from a previous plugin.

4. Enter your sitemap’s file name in the ‘Add a new sitemap’ field to complete the sitemap URL. With Yoast, this is ‘sitemap_index.xml’.

5. Click Submit.

The same should be done in Bing Webmaster Tools.

Review your website sitemap every month. If you’ve made changes to your site, generate an updated version and re-submit it to Google Search Console and Bing Webmaster Tools.

Add Your Sitemap to Your Robots.txt File

You should also add your sitemap location to your robots.txt file. A robots.txt file tells search engine crawlers which pages or files they can or can’t request from your site. Controlling which pages crawlers can access is related to, but not the same as, controlling which pages are indexed; indexing is covered in more detail below.
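Here’s what that can look like, along with a quick check using Python’s built-in urllib.robotparser module. The robots.txt contents are hypothetical: the domain, the sitemap file name (Yoast’s default, sitemap_index.xml), and the /wp-admin/ rule are placeholders for illustration, not recommendations for your site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the domain, path, and sitemap name are placeholders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://www.example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() (Python 3.8+) lists the Sitemap URLs the file declares.
print(parser.site_maps())  # ['https://www.example.com/sitemap_index.xml']
print(parser.can_fetch("Googlebot", "https://www.example.com/blog/"))      # True
print(parser.can_fetch("Googlebot", "https://www.example.com/wp-admin/"))  # False
```

One caveat: Python’s parser applies the first rule that matches, while Google uses the most specific (longest) matching rule, so the two can disagree on files that mix Allow and Disallow lines.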

Once Google Search Console has your sitemap and can read your robots.txt, you can manage most aspects of your technical SEO from the GSC dashboard. If you plan to do monthly SEO audits and technical SEO site maintenance, we highly recommend investing in software such as SEMRush.

The sections below cover the main areas of technical SEO to build into your workflow. If you aren’t comfortable editing backend files, hire a reputable SEO agency or a developer to help you.

Understanding Indexing

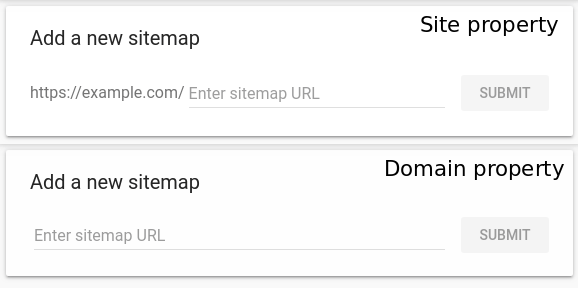

Once you’ve submitted your sitemap to Google and Bing, search engines will begin crawling and indexing your pages so they can appear in search results.

When a search engine like Google indexes your website, it stores and organizes the content found during the crawling process. Once a page is in the index, it’s in the running to be displayed as a search result for relevant user queries. Example search result for my brand name query:

Ideally, 90% or more of the pages you want found in search should be indexed, but it takes some time for search engines to catch up to newly published pages. That’s why keeping your sitemap and robots.txt files updated is so important.
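To check yourself against that 90% target, compare the URLs in your sitemap with the URLs Google reports as indexed. This Python sketch assumes you already have both lists (for example, from your sitemap file and an export from Search Console); the function name and the example.com URLs are hypothetical.

```python
def index_coverage(sitemap_urls, indexed_urls):
    """Return (share of sitemap URLs that are indexed, sorted list of missing URLs)."""
    sitemap = set(sitemap_urls)
    if not sitemap:
        return 0.0, []
    indexed = sitemap & set(indexed_urls)  # only count URLs that are in the sitemap
    missing = sorted(sitemap - indexed)
    return len(indexed) / len(sitemap), missing


if __name__ == "__main__":
    # Hypothetical data: 9 of 10 sitemap pages are indexed.
    sitemap_urls = [f"https://www.example.com/page-{i}/" for i in range(1, 11)]
    indexed_urls = sitemap_urls[:9]
    share, missing = index_coverage(sitemap_urls, indexed_urls)
    print(f"{share:.0%} indexed; missing: {missing}")
    # 90% indexed; missing: ['https://www.example.com/page-10/']
```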

However, there are also elements of your site you likely don’t want to be indexed, especially anything that could be considered “duplicate content” from what’s already on your site. This might be elements like category pages or cannibalized pages.

Choosing what is and isn’t indexed on your site works differently in each CMS (WordPress, Squarespace, etc.). Check your CMS’s help center for more information.
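Whatever your CMS, the “don’t index this page” setting usually ends up as a robots meta tag in the page’s HTML, such as <meta name="robots" content="noindex">, or as an X-Robots-Tag HTTP header. This Python sketch checks a page’s HTML for the meta-tag form (including the googlebot variant and the “none” shorthand, which means noindex, nofollow), so you can confirm a page you meant to exclude is actually marked. It doesn’t check HTTP headers, and the class name and sample HTML are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = {name: (value or "") for name, value in attrs}
        if attrs.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attrs.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindex(html):
    """Return True if the HTML carries a noindex (or none) robots meta directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives or "none" in parser.directives


# Hypothetical page head:
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(is_noindex(page))  # True
```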

Use Secure Protocol (HTTPS)

Your website should be using an HTTPS protocol (as opposed to HTTP, which is not encrypted).

An SSL certificate lets your site load over HTTPS, so your domain appears as “https://www…” rather than “http://www…”. It shows visitors that their connection to your site is secure. Here’s more information on how to secure your website. This is what it looks like when you have an SSL certificate:

What’s more, HTTPS is a confirmed Google ranking signal.

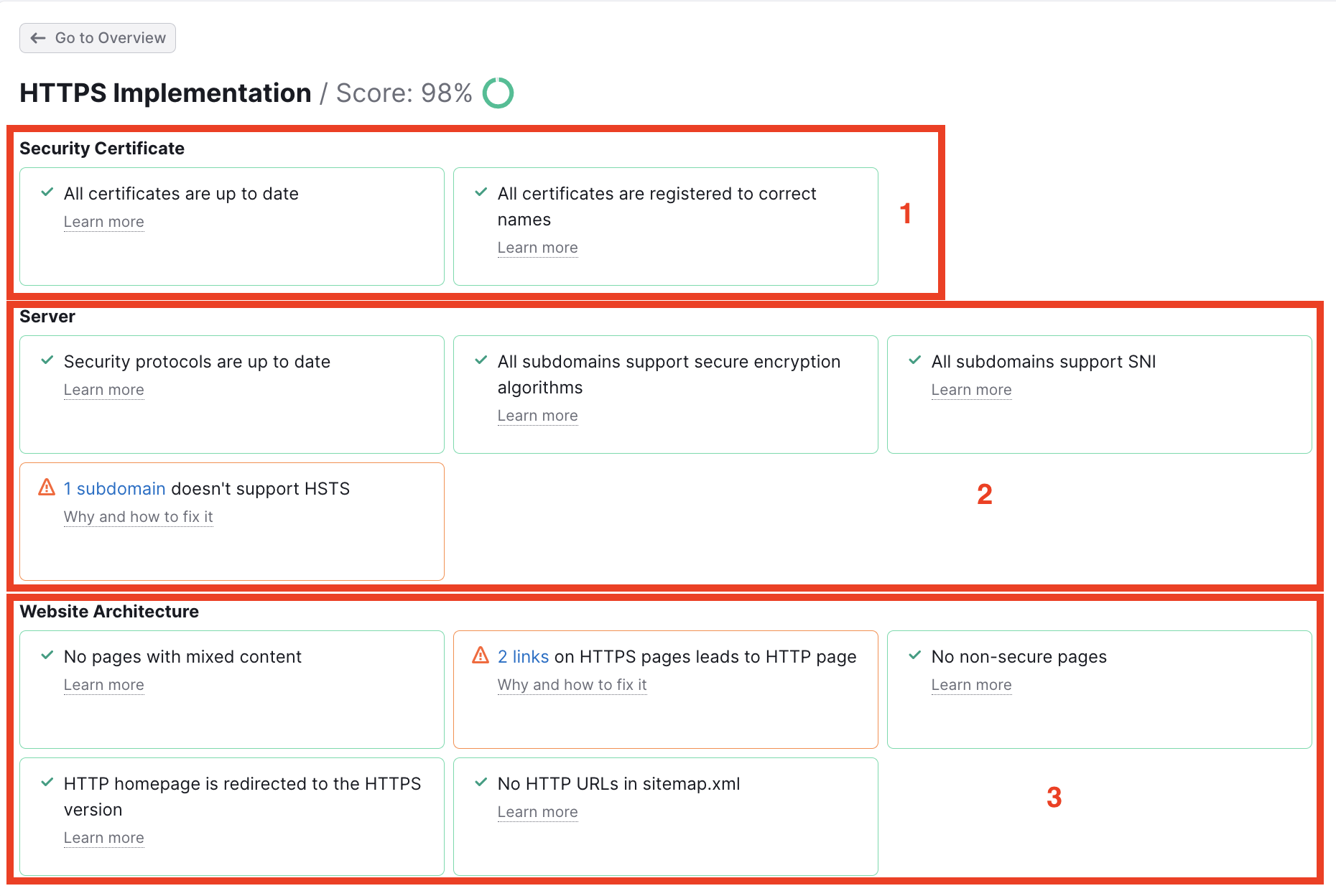

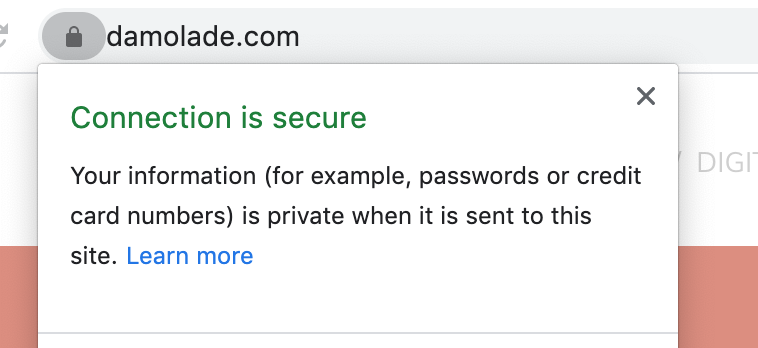

Fixing HTTPS issues isn’t usually complicated, but resolving them on your own can take time and may require help from a technical support person. In SEMRush, the HTTPS Implementation report lists potential issues with certificate registration (1), server support (2), and website architecture (3). Click any block for an explanation of the issue and how to fix it.

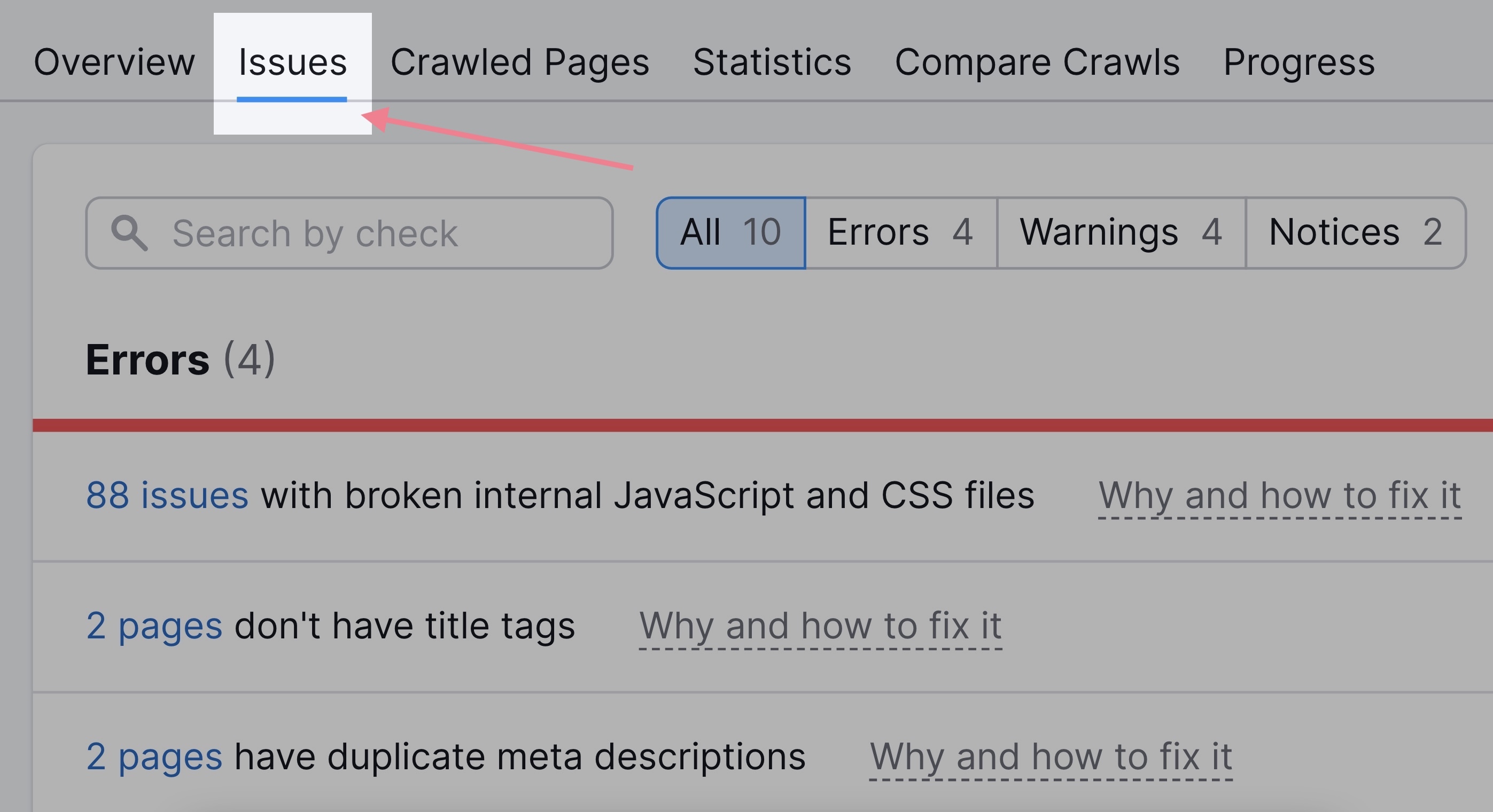

Errors / Warnings / Notices

View the “Errors,” “Warnings,” and “Notices” categories to determine what issues to prioritize.

Common Errors To Prioritize

404 Errors

A 404 error means a visitor is trying to reach a webpage that can’t be found on your site. Common causes include broken links and pages that were deleted without a redirect.

Search engine crawlers flag 404 errors, and they impact usability and trust. No one likes to land on a 404 page, not even a search engine. Run a monthly audit and fix these.

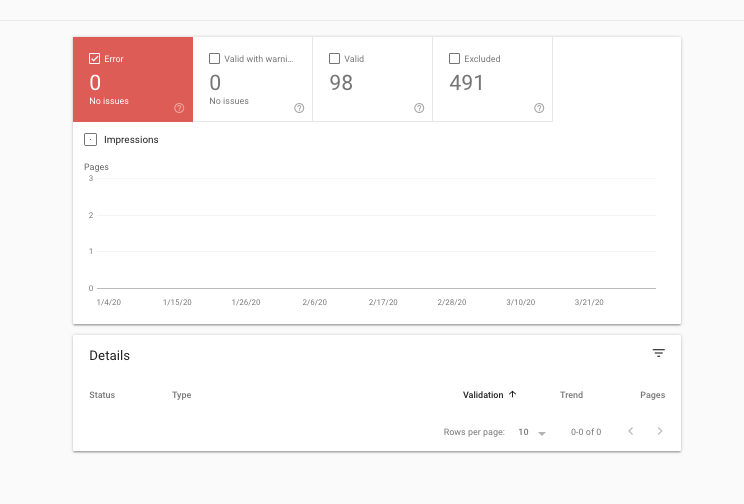

To find them, use Google Search Console. Crawl errors appear at the site level in the Page indexing report (formerly the Index Coverage report, and before that the Crawl Errors report) and at the individual URL level in the URL Inspection tool. These reports help you gauge the severity of each issue and group pages with similar issues so you can find common underlying causes.

Here’s what it looks like in Search Console.

Typically, setting up a 301 redirect will fix a 404 error. A 301 permanently diverts the traffic from the broken URL to a better one. It’s an appropriate solution if you’ve deleted a page you no longer need or if an external site links to the wrong URL, because it takes visitors to the page you intended them to visit.
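Redirects usually live in your CMS, a redirect plugin, or your server configuration. To show what a 301 actually does, here’s a minimal Python WSGI sketch: it looks up the requested path in a redirect map and responds with a 301 status and a Location header pointing to the new page; anything unmapped gets a 404. The paths are hypothetical, and the call helper simply invokes the app the way a WSGI server would, so you can see the response without running a server.

```python
# Hypothetical map of old paths to their replacements.
REDIRECTS = {
    "/old-services/": "/services/",
    "/blog/2019-seo-tips/": "/blog/seo-tips/",
}


def app(environ, start_response):
    """Minimal WSGI app: 301 for mapped paths, plain 404 for everything else."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target:
        # "Moved Permanently" tells browsers and crawlers to use the new URL.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found"]


def call(path):
    """Invoke the app the way a WSGI server would; return (status, headers)."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = dict(headers)

    app({"PATH_INFO": path}, start_response)
    return captured["status"], captured["headers"]


print(call("/old-services/"))     # ('301 Moved Permanently', {'Location': '/services/'})
print(call("/no-such-page/")[0])  # 404 Not Found
```

To try it in a browser, you could serve app locally with wsgiref.simple_server from Python’s standard library.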

Broken Internal Links

Broken internal links point users to pages on your own site that don’t exist. Multiple broken links hurt user experience and may worsen search engine rankings, because crawlers may conclude that your website is poorly maintained or coded.

To fix this issue, check each link reported as broken.

If a target webpage returns a 404 error, remove the link or replace it with a link to a working page.
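If you want to spot-check a page yourself, this Python sketch pulls the links out of a page’s HTML, keeps only internal ones (same host), and reports any that aren’t in a set of known live URLs. It assumes you already have that set (for example, from your sitemap) and uses a hypothetical domain and function names. It makes no network requests, so it won’t catch a listed page that actually returns an error.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def broken_internal_links(page_url, html, live_urls):
    """Return internal links on the page that are not in live_urls."""
    collector = LinkCollector()
    collector.feed(html)
    site_host = urlparse(page_url).netloc
    broken = []
    for href in collector.hrefs:
        # Resolve relative links and drop #fragments before comparing.
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        parsed = urlparse(absolute)
        # Skip external sites and non-web links such as mailto:.
        if parsed.scheme in ("http", "https") and parsed.netloc == site_host:
            if absolute not in live_urls:
                broken.append(absolute)
    return broken
```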

Duplicate Content

Duplicate content annoys your visitors and trips up search engine algorithms, causing errors in your SEMRush dashboard. When the spiders find duplicate content, they don’t know which to rank or index, which to give authority to, or if it should be split between them.

Duplicate content can be created in several ways, both intentionally and unintentionally. Either way, it’s a problem for good technical SEO. Examples include URL variations, different site versions (“www.site.com” and “site.com”), and scraped or copied content. Sometimes, scammy sites use bots to scrape content and add it to their own, which can hurt your site.

Find duplicate content with SEMRush’s Site Audit Tool.

In the dashboard of your initial Site Audit, open the Issues tab and enter “duplicate” in the search bar above the list of technical issues.

Searching for issues containing the word “duplicate” in the Site Audit tool.

If your domain has any duplicate pages, you’ll see a “Why and how to fix it” link in the same line.

Site Audit flags pages as duplicate content if their content is at least 85% identical. It also flags duplicate titles and meta descriptions.
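The sketch below is not how Semrush computes this; it’s one common way to estimate near-duplicate text, shown only to make the idea concrete. It splits each page’s text into overlapping five-word sequences (“shingles”), measures how much the two sets overlap (Jaccard similarity), and flags the pair at 0.85 or above to mirror the 85% threshold. The shingle size, the similarity measure, and the function names are assumptions.

```python
import re


def shingles(text, size=5):
    """Return the set of overlapping `size`-word sequences in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(text_a, text_b, size=5):
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 1.0  # two empty pages are treated as identical
    return len(a & b) / len(a | b)


def is_near_duplicate(text_a, text_b, threshold=0.85):
    """Flag a pair of pages whose similarity meets the (assumed) 0.85 threshold."""
    return similarity(text_a, text_b) >= threshold
```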

For instance, many pages may be targeting the same keyword. In that case, decide whether it makes sense to merge the content into one piece or 301 redirect the duplicates to the most authoritative version.

Another common reason a site has duplicate content is if you have several versions of the same URL.

For example:

- An HTTP version

- An HTTPS version

- A www version

- A non-www version

Google treats each of these as a separate URL. So, if your page is reachable at more than one of them, Google will consider it duplicate content.

To fix this issue, select a preferred version of your site and set up a sitewide 301 redirect. This will ensure only one version of your pages is accessible.
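The redirect itself is configured on your server, CDN, or CMS, but the rule it should follow is simple to sketch: work out the preferred version of any requested URL and redirect there only if it differs. This Python example assumes the preferred version is HTTPS without “www” (example.com is a placeholder, the function names are hypothetical, and you can choose whichever version you prefer).

```python
from urllib.parse import urlsplit, urlunsplit

PREFERRED_HOST = "example.com"  # assumption: the non-www version is preferred


def preferred_url(url):
    """Rewrite any http/https, www/non-www variant to the preferred HTTPS host."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host in (PREFERRED_HOST, "www." + PREFERRED_HOST):
        host = PREFERRED_HOST
    return urlunsplit(("https", host, parts.path or "/", parts.query, parts.fragment))


def redirect_target(url):
    """Return the URL to 301 redirect to, or None if the request is already canonical."""
    target = preferred_url(url)
    return None if target == url else target


print(redirect_target("http://www.example.com/services/?ref=ad"))  # https://example.com/services/?ref=ad
print(redirect_target("https://example.com/services/"))            # None
```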

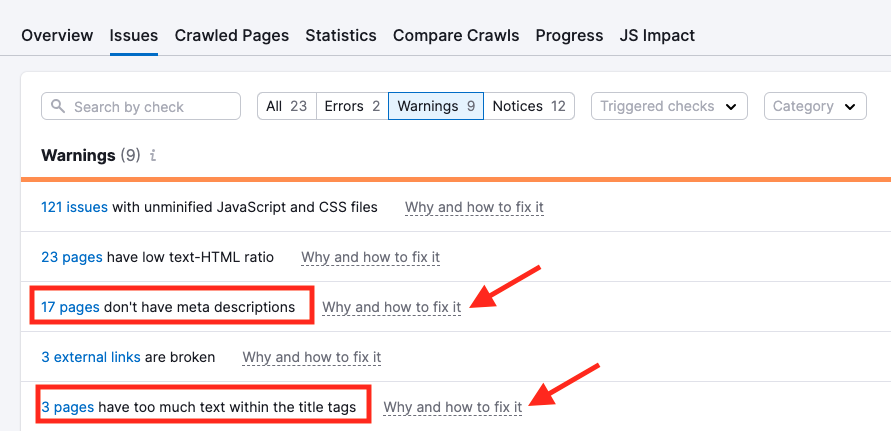

Title Tags & Meta Descriptions

Some issues, like duplicate meta titles and descriptions, can be fixed independently, but some may require a more robust content strategy to resolve.

To fix warnings about missing descriptions or title and description length, log in to your content management system and edit the affected pages.
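Before logging in, it helps to have a list of which pages need attention. This Python sketch checks a batch of pages for missing titles and descriptions, overly long ones, and duplicates across pages. The 60- and 160-character limits are rule-of-thumb assumptions, not official limits from Google or Semrush, and the function name and page data are hypothetical.

```python
from collections import defaultdict

TITLE_MAX = 60         # assumption: rule-of-thumb limit, not an official one
DESCRIPTION_MAX = 160  # assumption: rule-of-thumb limit, not an official one


def audit_meta(pages):
    """pages maps URL -> (title, description); returns a list of issue strings."""
    issues = []
    # Track which URLs use each (case-insensitive) title and description.
    seen = {"title": defaultdict(list), "description": defaultdict(list)}
    for url, (title, description) in pages.items():
        for field, value, limit in (
            ("title", title, TITLE_MAX),
            ("description", description, DESCRIPTION_MAX),
        ):
            value = (value or "").strip()
            if not value:
                issues.append(f"{url}: missing {field}")
                continue
            if len(value) > limit:
                issues.append(f"{url}: {field} is {len(value)} chars (limit {limit})")
            seen[field][value.lower()].append(url)
    for field, values in seen.items():
        for urls in values.values():
            if len(urls) > 1:
                issues.append(f"duplicate {field} on: {', '.join(urls)}")
    return issues


# Hypothetical pages:
pages = {
    "https://example.com/a/": ("Plumbing Services", "Fast, friendly plumbing."),
    "https://example.com/b/": ("Plumbing Services", ""),
}
for issue in audit_meta(pages):
    print(issue)
```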

Site Performance

Optimize Your Site Speed

You want a fast website. In the SEO world, “time-to-first-byte,” or “TTFB,” correlates highly with rankings. TTFB is the time between a browser requesting a page and receiving the first byte of the response. You want your TTFB to be low.
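For a rough TTFB reading outside Google’s tools, this Python sketch times one request with the standard http.client module, from sending the request until the status line and headers arrive. Treat it as an approximation: it includes connection setup (and the TLS handshake for HTTPS), reflects your own machine and network, and is a single sample. The function name and the host in the example comment are placeholders.

```python
import http.client
import time


def measure_ttfb(host, path="/", use_https=True, timeout=10):
    """Approximate TTFB in seconds: time until the response status and headers arrive.

    host may include a port, e.g. "127.0.0.1:8000".
    """
    conn_cls = http.client.HTTPSConnection if use_https else http.client.HTTPConnection
    conn = conn_cls(host, timeout=timeout)
    try:
        start = time.perf_counter()
        conn.request("GET", path)      # opens the connection, then sends the request
        response = conn.getresponse()  # returns once the status line and headers are read
        elapsed = time.perf_counter() - start
        response.read()  # drain the body before closing
        return elapsed
    finally:
        conn.close()


# Example (network call, placeholder host):
# print(f"TTFB: {measure_ttfb('www.example.com') * 1000:.0f} ms")
```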

Why? Because 40% of people will close a website if it takes longer than three seconds to load. Further, 47% of polled consumers expect a page to load within two seconds.

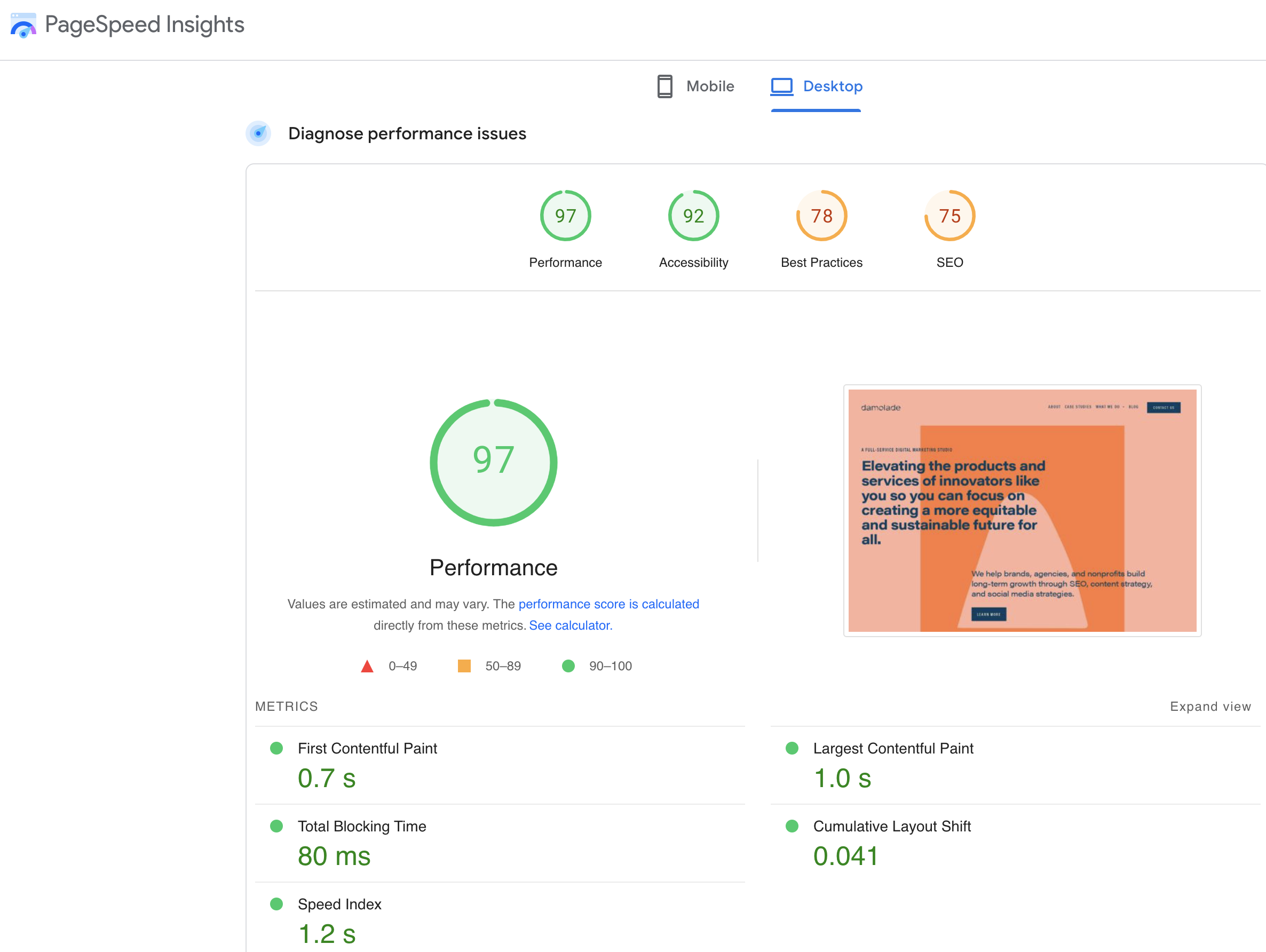

Use Google’s PageSpeed Insights tool to see how fast your site loads and runs.

One contributing factor to site speed is fast hosting. Do your research before committing to a host. Here are some ranked hosts for online stores.

Other tactics for optimizing your site’s speed include:

- Use a fast DNS (domain name system) provider like Cloudflare or WordPress.com.

- Minimize HTTP requests by keeping scripts and plugins to a minimum.

- Use one CSS stylesheet (the code that tells a web browser how to display your website) instead of multiple CSS stylesheets or inline CSS.

- Compress your images before uploading them into your CMS.

- Lazy-load images so offscreen images load only when a visitor scrolls to them.

- Optimize the files you upload to your CMS. We like Smush for WordPress.
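Many themes and optimization plugins can combine stylesheets for you, but the idea behind “one stylesheet” is simple enough to sketch in Python: read several CSS files in the order the page loads them and write a single file, so the browser makes one request instead of several. The function and file names are hypothetical, and the sketch doesn’t minify, rewrite relative url() paths, or move @import rules to the top, which a build tool or plugin would handle.

```python
from pathlib import Path


def bundle_css(sources, output):
    """Concatenate CSS files, in order, into a single stylesheet and return its path.

    Order matters: when two rules have equal specificity, the later one wins,
    so pass the files in the same order the page currently loads them.
    """
    parts = []
    for source in sources:
        path = Path(source)
        css = path.read_text(encoding="utf-8").strip()
        # A comment marks where each original file starts, to ease debugging.
        parts.append(f"/* {path.name} */\n{css}\n")
    out_path = Path(output)
    out_path.write_text("\n".join(parts), encoding="utf-8")
    return out_path


# Example with hypothetical file names:
# bundle_css(["reset.css", "theme.css", "blog.css"], "site.css")
```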

If you’re not comfortable working with backend files, you’ll likely need help fixing some of the issues in the diagnostics report.

Take a Mobile-Friendly and Mobile-First Approach

Considering that more than 50% of web traffic today comes from mobile devices, it’s fair to say that nearly every website should take a mobile-first approach, and every website should be mobile-friendly. That means responsive design, navigation that makes sense in mobile browsers, fast load times, no intrusive pop-ups on mobile, etc.

Note that Google retired the Mobile Usability report in December 2023, so it’s no longer available in Google Search Console, but page speed remains a crucial ranking factor in Google’s algorithm.

Chrome’s Lighthouse and Google’s PageSpeed Insights tool are the most robust resources for evaluating mobile-friendliness.
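PageSpeed Insights also offers an API (v5) that returns the Lighthouse results as JSON, which is handy for checking many pages. This Python sketch builds the request URL and reads the performance score, which the API reports from 0 to 1, out of a response. The page URL is a placeholder, the function names are hypothetical, API keys aren’t shown (you may need one for frequent automated requests), and fetch_score needs network access.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile"):
    """Build a PageSpeed Insights v5 request URL (strategy: 'mobile' or 'desktop')."""
    return f"{PSI_ENDPOINT}?{urlencode({'url': page_url, 'strategy': strategy})}"


def performance_score(psi_response):
    """Return the Lighthouse performance score as 0-100 from a parsed PSI response."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def fetch_score(page_url, strategy="mobile"):
    """Call the live API (network required) and return the performance score."""
    with urlopen(psi_request_url(page_url, strategy), timeout=60) as resp:
        return performance_score(json.load(resp))


# Example (network call, placeholder URL):
# print(fetch_score("https://www.example.com/"))
```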

If you’re not technical, you’ll likely need your web developer or designer to help you implement these tools’ recommendations.

AMP

Another way to improve site performance on mobile devices is to use Accelerated Mobile Pages (AMPs), which are stripped-down versions of your pages.

AMPs load quickly on mobile devices because Google can serve them from its AMP cache rather than sending requests to your server.

I’d only recommend implementing AMP on a blog that’s already generating high traffic and seeing a solid conversion rate from that content. Read more about AMP.

Check Links to Your Site

Depending on your past link-building activities, you could have some backlinks that are hurting your rankings. Use Google Search Console or SEMRush to run a backlink report. Go through the report, check any links that look suspicious, and try to get them removed. Here’s where to find backlink reports in Google Search Console:

Again, here’s where some additional software could come in handy. If you find a bunch of shady links pointing to your site, you should consider SEMRush to help you manage the process of getting them removed.
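Whatever tool you use, a long backlink report is easier to review by referring domain. This Python sketch groups a list of linking page URLs by domain and sorts the domains by link count, so unfamiliar sites sending many links stand out. It assumes you’ve already exported the linking URLs from your backlink report (export formats vary, so reading the file is left out); the function name and URLs are hypothetical, and a high count alone doesn’t make a link harmful.

```python
from collections import Counter
from urllib.parse import urlparse


def links_by_domain(linking_urls):
    """Count backlinks per referring domain, most links first."""
    domains = Counter()
    for url in linking_urls:
        host = urlparse(url).netloc.lower()
        if host.startswith("www."):
            host = host[4:]  # treat www and non-www as the same domain
        if host:
            domains[host] += 1
    return domains.most_common()


# Hypothetical export:
urls = [
    "https://www.partner.com/post",
    "https://spammy-directory.biz/a",
    "https://spammy-directory.biz/b",
    "http://spammy-directory.biz/c",
]
print(links_by_domain(urls))  # [('spammy-directory.biz', 3), ('partner.com', 1)]
```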

Schedule Audits

About every three months, look at historical site performance (this quarter, the last 12 months, and all-time) to see how the current performance of your content compares to past performance. For the sake of this article, I’m defining performance by visits from organic search and search engine page rankings. If you have numbers that are down for a certain goal or objective, it’s time to dig a little deeper there.
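To see where to dig deeper, compare each page’s organic visits between two periods and flag the biggest drops. This Python sketch assumes you’ve exported visits per page for both periods (for example, from your analytics tool); the function name, the 20% threshold, and the page data are hypothetical.

```python
def flag_drops(previous, current, threshold=0.20):
    """Return (page, percent change) for pages whose visits fell by at least threshold.

    previous and current map a page URL to its organic visits for each period;
    a page missing from current counts as 0 visits. Biggest drops come first.
    """
    flagged = []
    for page, before in previous.items():
        if before <= 0:
            continue  # no baseline to compare against
        after = current.get(page, 0)
        change = (after - before) / before
        if change <= -threshold:
            flagged.append((page, round(change * 100, 1)))
    return sorted(flagged, key=lambda item: item[1])


# Hypothetical quarter-over-quarter data:
previous = {"/services/": 1000, "/blog/seo-tips/": 400, "/blog/old-post/": 50}
current = {"/services/": 950, "/blog/seo-tips/": 200}
print(flag_drops(previous, current))  # [('/blog/old-post/', -100.0), ('/blog/seo-tips/', -50.0)]
```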

Content auditing on a schedule is important because catching issues early can mean avoiding major dips and penalties in search engine rankings. I have included a schedule in the free swipe file below.

If you’re ready to take this on yourself, grab the swipe file, which includes bonus on-page SEO techniques, a how-to on using the information architecture template, and a spreadsheet template demonstrating how to do an SEO Content Audit & Information Architecture Health Check.

Final Thoughts

We know we just threw a mountain of information at you. If you have any questions about technical SEO or are looking for help getting your site into shape, contact us any time.
